For our optophone kit in the Kits for Cultural History series, I’m working on the automated conversion of text into sound. In a common version of the optophone, this conversion was executed by a “tracer.” An operator would use an attached handle to move the tracer from left to right, scanning type as it went.

Image of a tracer from a 1920 issue of Scientific American

Tracers used selenium not only to detect changes in light (for example, the difference between black type and white page) but also to translate these differences into electrical signals and then into continuous streams of sound or silence. As an optophone scanned across a page, an operator interpreted the pattern of sound and silence over time as specific characters or words.

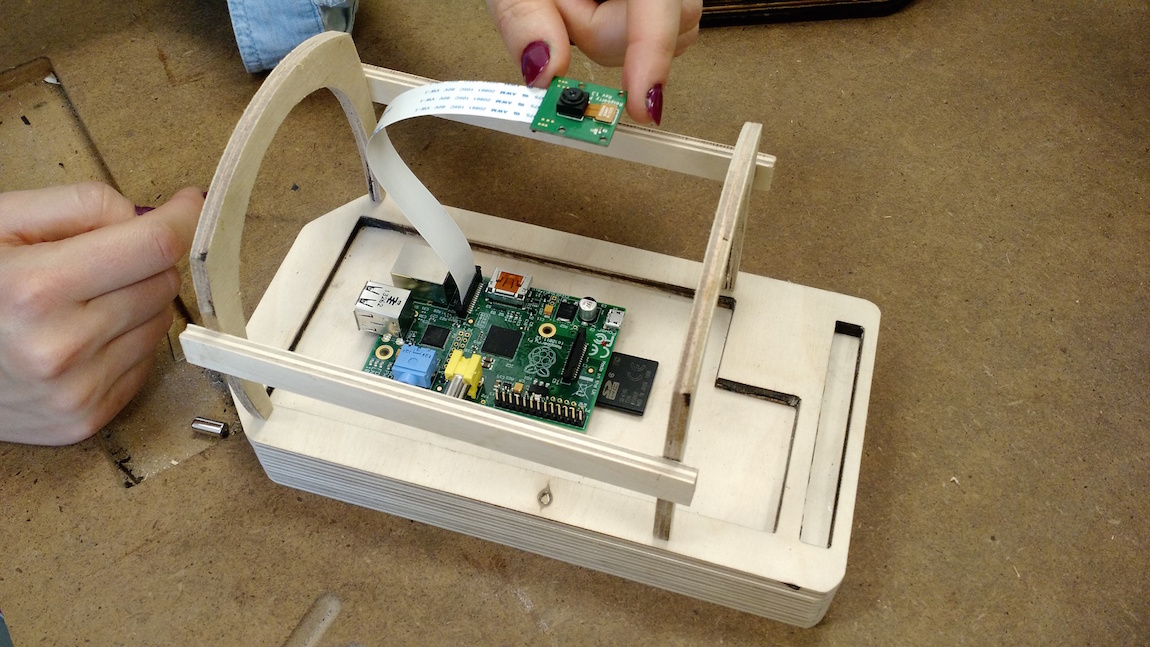

To remake a tracer, I’m using a Raspberry Pi (RPi) and a small camera similar to a laptop camera (see photograph below). The camera takes a picture of the text and passes the image to an optical character recognition (OCR) program, which converts the image into a string of characters (see script).

Optophone Prototype with an RPi

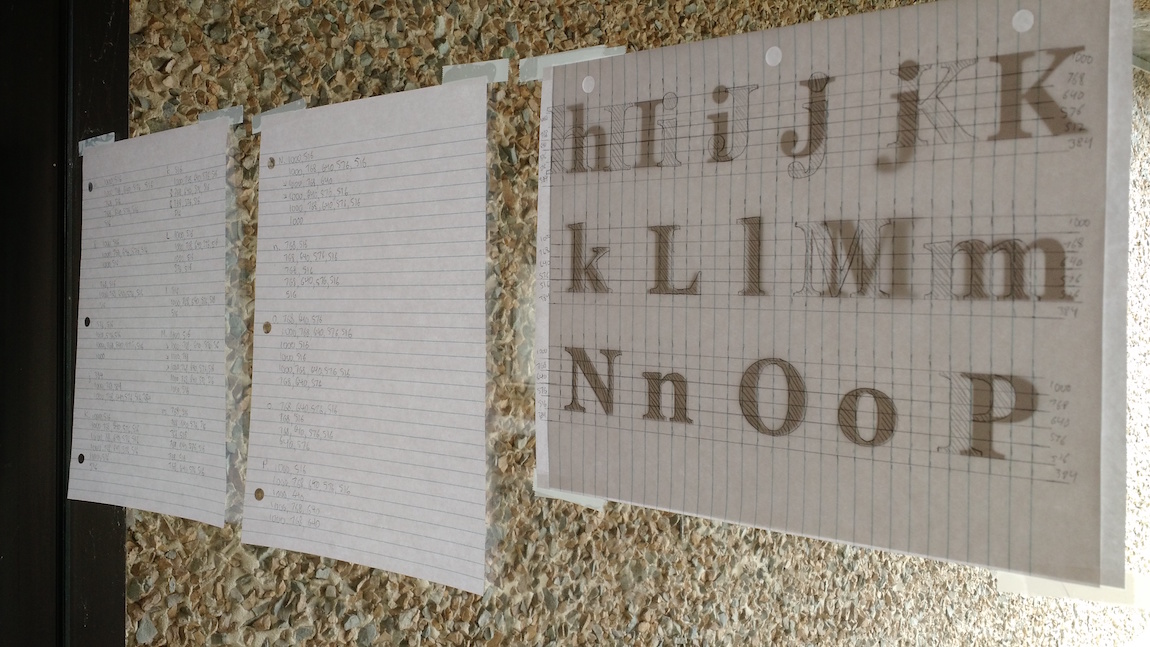

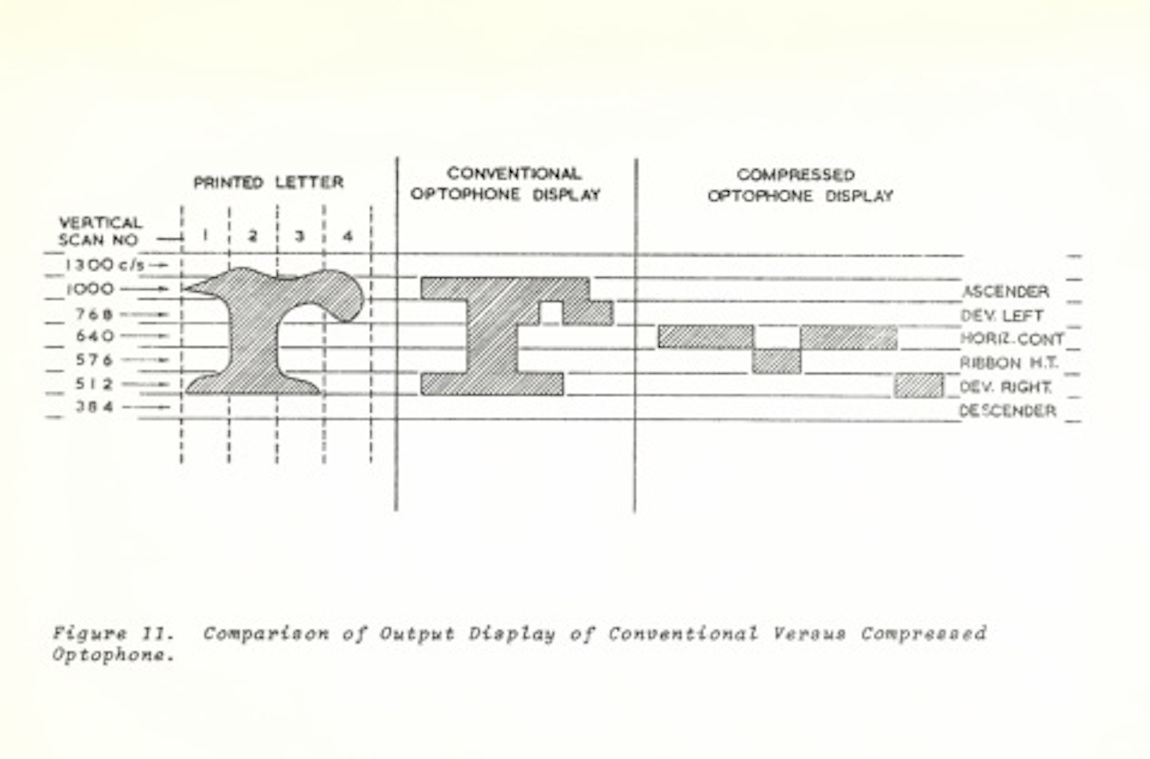

A Python script running on the RPi then matches each character to a sound file in a pre-generated dictionary of sounds (see script). The sounds are played through a pair of headphones connected to the RPi. To make a custom dictionary, I printed a series of letters and common punctuation marks and then traced over them (see first image below) according to Patrick Nye’s diagram (see second image below) of one particular optophone schema. (The schema changed across versions of the optophone.)

Image care of Mara Mills, in “Optophones and Musical Print”
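The character-to-sound lookup described above can be illustrated with a short Python sketch. This is not the project's script: the `sounds` directory, the file-naming scheme (one WAV file per character, named by its Unicode code point), and the function names `build_sound_dictionary` and `files_for_text` are all assumptions made for the example.

```python
from pathlib import Path

# Hypothetical layout: one WAV file per character, named by its Unicode
# code point in hex (e.g. "T" -> sounds/0054.wav), so that punctuation
# marks never have to appear in a file name.
SOUND_DIR = Path("sounds")


def build_sound_dictionary(characters, sound_dir=SOUND_DIR):
    """Map each supported character to the path of its sound file."""
    return {ch: sound_dir / f"{ord(ch):04x}.wav" for ch in characters}


def files_for_text(text, sound_dictionary):
    """Return the sound files for a string, in reading order.

    Characters with no entry in the dictionary (spaces, for instance,
    unless they were added) are skipped rather than raising an error.
    """
    return [sound_dictionary[ch] for ch in text if ch in sound_dictionary]
```

With a dictionary built from `"Type.,"`, calling `files_for_text("Type", ...)` would return the four paths for T, y, p, and e in that order. Playback is left out of the sketch, since it depends on which audio library or command-line player is available on the RPi.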

Each part of the letter is keyed to a different frequency, and the pattern of frequencies distinguishes one character from another. In Python, I converted this pattern into tones and then into sound files (see script). See below for a video demonstrating what “Type” may have sounded like according to this optophonic method. The next step is to express an entire page of type as a series of tones.
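To make the idea of "pattern into tones into sound files" concrete, here is a minimal sketch using only Python's standard library. It assumes a character can be described as a left-to-right list of columns, where each column lists which parts (rows) of the letter contain ink. The frequencies in `ROW_FREQS` are placeholders rather than the values in Nye's diagram, and the names `column_samples` and `write_character` are hypothetical.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100                     # samples per second
SAMPLES_PER_COLUMN = SAMPLE_RATE // 10  # each column sounds for 0.1 s

# Hypothetical row-to-frequency table (Hz), lowest part of the letter first.
# Placeholder values only; substitute the frequencies from the schema in use.
ROW_FREQS = [392.0, 523.25, 587.33, 659.25, 783.99]


def column_samples(active_rows):
    """Return 16-bit samples for one scanned column of a character."""
    if not active_rows:
        # No ink under the tracer: silence.
        return [0] * SAMPLES_PER_COLUMN
    samples = []
    for i in range(SAMPLES_PER_COLUMN):
        t = i / SAMPLE_RATE
        # Sum one sine wave per inked row, then scale to avoid clipping.
        mix = sum(math.sin(2 * math.pi * ROW_FREQS[r] * t) for r in active_rows)
        samples.append(int(32767 * 0.8 * mix / len(active_rows)))
    return samples


def write_character(columns, path):
    """Write a mono 16-bit WAV file for one character; return its length in samples."""
    samples = []
    for rows in columns:
        samples.extend(column_samples(rows))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit audio
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))
    return len(samples)
```

For example, `write_character([[0, 4], [], [2]], "example.wav")` would produce a two-tone chord, a gap of silence, and then a single tone. The column pattern here is invented for illustration and does not correspond to any real letter.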

Post by Tiffany Chan, attached to the KitsForCulture project, with the physcomp and fabrication tags. Featured image care of Tiffany Chan. Thanks to Robert Baker (Blind Veterans UK), Mara Mills (New York University), and Matthew Rubery (Queen Mary University of London) for their support and feedback on this research. Research conducted with Katherine Goertz, Danielle Morgan, Victoria Murawski, and Jentery Sayers.