At the University of Nebraska-Lincoln last week, the Maker Lab and the Modernist Versions Project (MVP) had various opportunities to share their research during Digital Humanities 2013, which was a wonderful event. Special thanks to the Center for Digital Research in the Humanities for being such a fantastic host. The conference was incredibly well organized, and—across all sessions—the talks were thoroughly engaging.

Below are abstracts for the long paper (“Made to Make: Expanding Digital Humanities through Desktop Fabrication”) and the workshop (“From 2D to 3D: An Introduction to Desktop Fabrication”) that I conducted in collaboration with Jeremy Boggs and Devon Elliott. The long paper and workshop both engaged the Lab’s desktop fabrication research. Also included is an abstract for the MVP’s short paper on its ongoing versioning research. That talk was delivered by Susan Schreibman on behalf of the MVP and was authored by Daniel Carter, with feedback from Susan, Stephen Ross, and me. Finally, during the Pedagogy Lightning Talks at the Annual General Meeting of the Association for Computers and the Humanities, I gave a very brief introduction to one of the Lab’s most recent projects, “Teaching and Learning Multimodal Communications” (published by the IJLM and MIT Press).

Elsewhere, you can view the slide deck for the “Made to Make” talk, fork the source files for that slide deck, and review the notes from the desktop fabrication workshop, where we also compiled a short bibliography on desktop fabrication in the humanities. To give the papers and workshop some context, below I’ve also embedded some tweets from the conference. Thanks, too, to Nina Belojevic, Alex Christie, and Jon Johnson for their contributions to aspects of the “Made to Make” presentation.

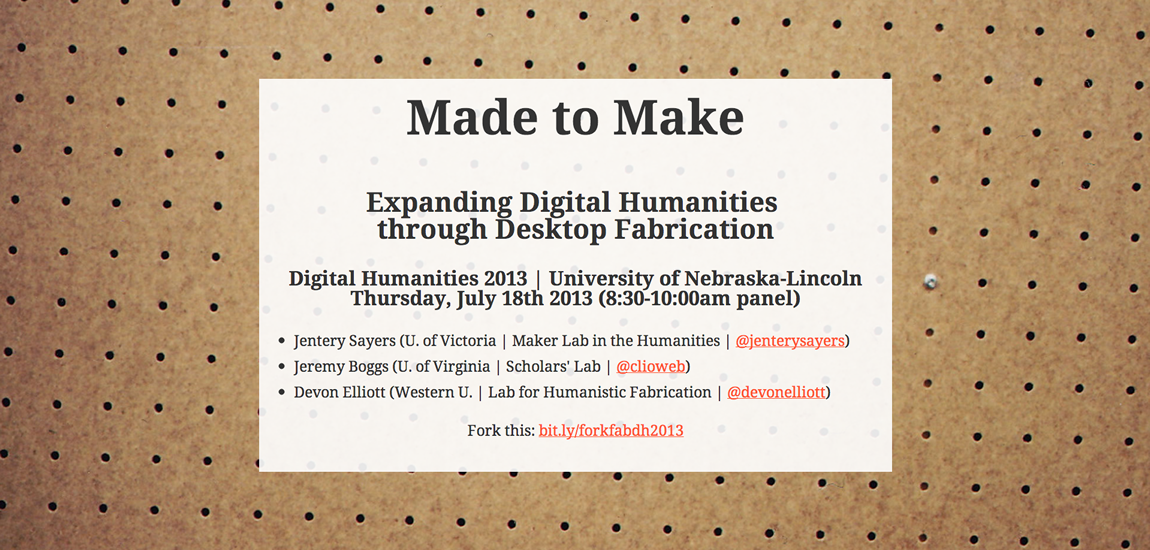

“Made to Make: Expanding Digital Humanities through Desktop Fabrication”

Jentery Sayers, Assistant Professor, English, University of Victoria

Jeremy Boggs, Design Architect, Digital Research and Scholarship, Scholars’ Lab, University of Virginia Library

Devon Elliott, PhD candidate, History, Western University

Contributing labs: Scholars’ Lab (University of Virginia), the Lab for Humanistic Fabrication (Western University), and the Maker Lab in the Humanities (University of Victoria)

Slide deck | Source files

This paper presents substantive, cross-institutional research conducted on the relevance of desktop fabrication to digital humanities research. The researchers argue that matter is a new medium for digital humanities, and—as such—the field’s practitioners need to develop the workflows, best practices, and infrastructure necessary to meaningfully engage digital/material convergence, especially as it concerns the creation, preservation, exhibition, and delivery of cultural heritage materials in 3D. Aside from sharing example workflows, best practices, and infrastructure strategies, the paper identifies several key growth areas for desktop fabrication in digital humanities contexts. Ultimately, it demonstrates how digital humanities is “made to make,” or already well positioned to contribute significantly to desktop fabrication research.

Desktop fabrication is the digitization of analog manufacturing techniques (Gershenfeld 2005). Comparable to desktop publishing, it affords the output of digital content (e.g., 3D models) in physical form (e.g., plastic). It also personalizes production through accessible software and hardware, with more flexibility and rapidity than its analog predecessors. Common applications include using desktop 3D printers, milling machines, and laser cutters to prototype, replicate, and refashion solid objects.

To date, desktop fabrication has been used by historians to build exhibits (Elliott, MacDougall, and Turkel 2012); by digital media theorists to fashion custom tools (Ratto and Ree 2012); by scholars of teaching and learning to re-imagine the classroom (Meadows and Owens 2012); by archivists to model and preserve museum collections (Terdiman 2012); by designers to make physical interfaces and mechanical sculptures (Igoe 2007); and by well-known authors to “design” fiction as well as write it (Bleecker 2009; Sterling 2009). Yet, even in fields such as digital humanities, very few non-STEM researchers know how desktop fabrication actually works, and research on it is especially lacking in humanities departments across North America.

By extension, humanities publications on the topic are rare. For instance, “desktop fabrication” never appears in the archives of Digital Humanities Quarterly. The term and its methods have their legacies elsewhere, in STEM laboratories, research, and publications, with Neil Gershenfeld’s Fab: The Coming Revolution on Your Desktop (2005) being one of the most referenced texts. Gershenfeld’s key claim is that: “Personal fabrication will bring the programmability of digital worlds we’ve invented to the physical world we inhabit” (17). This attention to digital/material convergence has prompted scholars such as Matt Ratto and Robert Ree (2012) to argue for: 1) “physical network infrastructure” that supports “novel spaces for fabrication” and educated decisions in a digital economy, 2) “greater fluency with 3D digital content” to increase competencies in digital/material convergence, and 3) an established set of best practices, especially as open-source objects are circulated online and re-appropriated.

To be sure, digital humanities practitioners are well equipped to actively engage all three of these issues. The field is known as a field of makers. Its practitioners are invested in knowing by doing, and they have been intimately involved in the development of infrastructure, best practices, and digital competencies (Balsamo 2009; Elliott, MacDougall, and Turkel 2012). They have also engaged digital technologies and content directly, as physical objects with material particulars (Kirschenbaum 2008; McPherson 2009). The key question, then, is how to mobilize the histories and investments of digital humanities to significantly contribute to desktop fabrication research and its role in cultural heritage.

To spark such contributions, the researchers are asking the following questions: 1) What are the best procedures for digitizing rare or obscure 3D objects? 2) What steps should be taken to verify the integrity of 3D models? 3) How should the source code for 3D objects be licensed? 4) Where should that source code be stored? 5) How are people responsible for the 3D objects they share online? 6) How and when should derivatives of 3D models be made? 7) How are fabricated objects best integrated into interactive exhibits of cultural heritage materials? 8) How are fabricated objects best used for humanities research? 9) What roles should galleries, libraries, archives, and museums (GLAM) play in these processes?

In response to these questions, the three most significant findings of the research are as follows:

I) Workflow: Currently, there is no established workflow for fabrication research in digital humanities contexts, including those that focus on the creation, preservation, exhibition, and delivery of cultural heritage materials. Thus, the first and perhaps most obvious finding is that such a workflow needs to be articulated, tested in several contexts, and shared with the community. At this time, that workflow involves the following procedure: 1) Use a DSLR camera and a turntable to take at least twenty photographs of a stationary object. This process should be conducted in consultation with GLAM professionals, either on or off site. 2) Use software (e.g., 123D Catch) to stitch the images into a 3D scale model. 3) In consultation with GLAM professionals and domain experts, error-correct the model using appropriate software (e.g., Blender or Mudbox). What constitutes an “error” should be concretely defined and documented. 4) Output the model as an STL file. 5) Use printing software (e.g., ReplicatorG) to process the STL file into G-code. 6) Send the G-code to a 3D printer for fabrication.

If the object is part of an interactive exhibit of cultural heritage materials, then: 7) Integrate the fabricated object into a circuit using appropriate sensors (e.g., touch and light), actuators (e.g., diodes and speakers), and shields (e.g., wifi and ethernet). 8) Write a sketch (e.g., in Processing) to execute intelligent behaviors through the circuit. 9) Test the build and document its behavior. 10) Refine the build for repeated interaction. 11) Use milling and laser-cutting techniques to enhance interaction through customized materials. If the object and/or materials for the exhibit are being published online, then: 12) Consult with GLAM professionals and domain experts to address intellectual property, storage, and attribution issues, including whether the object can be published in whole or in part. 13) License all files appropriately, state whether derivatives are permitted, and provide adequate metadata (e.g., using Dublin Core). 14) Publish the STL file, G-code, circuit, sketch, documentation, and/or build process via a popular repository (e.g., at Thingiverse) and/or a GLAM/university domain. When milling or laser-cutting machines are used as the primary manufacturing devices instead of 3D printers (see step 6 above), the workflow is remarkably similar.
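Steps 4 and 13 of this workflow can be made a little more concrete with a short sketch. What follows is only an illustration, not the researchers' tooling: it assumes the model was exported as a binary STL (an 80-byte header, a 32-bit triangle count, then 50 bytes per triangle), checks that the file length agrees with that layout, and then builds a small Dublin Core record that carries a checksum. The function names, the `metadata` wrapper element, and the sample values are all hypothetical.

```python
import hashlib
import struct
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core element set namespace


def binary_stl_triangle_count(data: bytes) -> int:
    """Return the triangle count of a binary STL, or raise ValueError.

    Layout: 80-byte header, little-endian uint32 count, 50 bytes per triangle.
    This is a structural check only; it says nothing about mesh quality.
    """
    if len(data) < 84:
        raise ValueError("too short to be a binary STL")
    (count,) = struct.unpack_from("<I", data, 80)
    expected = 84 + 50 * count
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes, found {len(data)}")
    return count


def dublin_core_record(data: bytes, title: str, creator: str, rights: str) -> str:
    """Build a minimal Dublin Core XML record for a model file (hypothetical schema)."""
    ET.register_namespace("dc", DC_NS)
    root = ET.Element("metadata")  # wrapper element name is an assumption
    fields = {
        "title": title,
        "creator": creator,
        "rights": rights,  # the license, including whether derivatives are permitted
        "format": "model/stl",
        "identifier": "sha256:" + hashlib.sha256(data).hexdigest(),
    }
    for name, value in fields.items():
        ET.SubElement(root, f"{{{DC_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")


def one_triangle_stl() -> bytes:
    """Create a tiny in-memory STL so the sketch runs without any files."""
    header = b"example model".ljust(80, b"\0")
    # One normal (3 floats), three vertices (9 floats), 2-byte attribute count.
    triangle = struct.pack(
        "<12fH",
        0.0, 0.0, 1.0,
        0.0, 0.0, 0.0,
        1.0, 0.0, 0.0,
        0.0, 1.0, 0.0,
        0,
    )
    return header + struct.pack("<I", 1) + triangle


if __name__ == "__main__":
    stl = one_triangle_stl()
    print(binary_stl_triangle_count(stl))
    print(dublin_core_record(stl, "Example object", "Example Lab", "CC BY 4.0"))
```

A check like this catches only truncated or mislabeled files. Deciding what counts as an “error” in the model itself remains a matter for GLAM professionals and domain experts, as step 3 notes.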

II) Infrastructure: In order to receive feedback on the relevance of fabrication to the preservation, discoverability, distribution, and interpretation of cultural heritage materials, humanities practitioners should actively consult with GLAM professionals. For instance, the researchers are currently collaborating with libraries at the following institutions: the University of Virginia, the University of Toronto, York University, Western University, McMaster University, the University of Washington, and the University of Victoria.

By extension, desktop fabrication research extends John Unsworth’s (1999) premise of “the library as laboratory” into all GLAM institutions and suggests that new approaches to physical infrastructure may be necessary. Consequently, the second significant finding of this research is that makerspaces should play a more prominent role in digital humanities research, especially research involving the delivery of cultural heritage materials in 3D. Here, existing spaces that are peripheral or unrelated to digital humanities serve as persuasive models. These spaces include the Critical Making Lab at the University of Toronto and the Values in Design Lab at University of California, Irvine. Based on these examples, a makerspace for fabrication research in digital humanities would involve the following: 1) training in digital/material convergence, with an emphasis on praxis and tacit knowledge production, 2) a combination of digital and analog technologies, including milling, 3D-printing, scanning, and laser-cutting machines, 3) a flexible infrastructure, which would be open-source and sustainable, 4) an active partnership with a GLAM institution, and 5) research focusing on the role of desktop fabrication in the digital economy, with special attention to the best practices identified below.

III) Best practices: Desktop fabrication, especially in the humanities, currently lacks articulated best practices in the following areas: 1) attribution and licensing of cultural heritage materials in 3D, 2) sharing and modifying source code involving cultural heritage materials, 3) delivering and fabricating component parts of cultural heritage materials, 4) digitizing and error-correcting 3D models of cultural artifacts, and 5) developing and sustaining desktop fabrication infrastructure.

This finding suggests that, in the future, digital humanities practitioners have the opportunity to actively contribute to policy-making related to desktop fabrication, especially as collections of 3D materials (e.g., Europeana and Thingiverse) continue to grow alongside popular usage. Put differently: desktop fabrication is a disruptive technology. Governments, GLAM institutions, and universities have yet to determine its cultural implications. As such, this research is by necessity a matter of social importance and an opportunity for digital humanities to shape public knowledge.

Fab talk about Made to Make: Expanding Digital Humanities through Desktop Fabrication at #DH2013 by @JenterySayers @clioweb @devonelliott

— Geoffrey Rockwell (@GeoffRockwell) July 18, 2013

great ideas in this desktop fabrication talk w/ @jenterysayers @clioweb @devonelliott. they need a whole session. #dh2013

— susan garfinkel (@footnotesrising) July 18, 2013

“From 2D to 3D: An Introduction to Desktop Fabrication”

Jeremy Boggs, Design Architect, Digital Research and Scholarship, Scholars’ Lab, University of Virginia Library

Devon Elliott, PhD candidate, History, Western University

Jentery Sayers, Assistant Professor, English, University of Victoria

Notes from the workshop

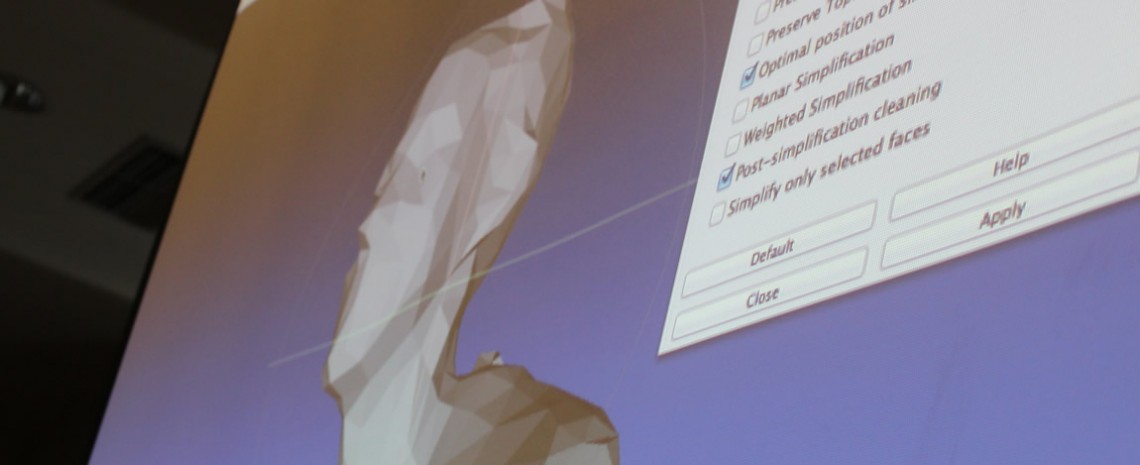

Desktop fabrication is the digitization of analog manufacturing techniques. Comparable to desktop publishing, it affords the output of digital content (e.g., 3D models) in physical form (e.g., plastic). It also personalizes production through accessible software and hardware, with more flexibility and rapidity than its analog predecessors. Additive manufacturing is a process whereby a 3D form is constructed by building successive layers of a melted source material (at the moment, this is most often some type of plastic). The technologies driving additive manufacturing in the desktop fabrication field are 3D printers, tabletop devices that materialize digital 3D models. In this workshop, we will introduce technologies used for desktop fabrication and additive manufacturing, and offer a possible workflow that bridges the digital and physical worlds for work with three-dimensional forms. We will begin by introducing 3D printers, demonstrating how they operate by printing things throughout the event. The software used in controlling the printer and in preparing models to print will be explained. We will use free software sources so those in attendance can experiment with the tools as they are introduced.
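To make “successive layers” concrete, here is a minimal sketch of the arithmetic a slicing program performs before it generates any toolpaths. It is an illustration only: the function name and the 0.2 mm layer height are assumptions, and real printing software does far more than this.

```python
import math


def layer_heights(model_height_mm: float, layer_height_mm: float) -> list:
    """Return the z-height (in mm) of the top of each printed layer."""
    if model_height_mm <= 0 or layer_height_mm <= 0:
        raise ValueError("heights must be positive")
    # The small tolerance keeps floating-point noise from adding a spurious layer.
    count = math.ceil(model_height_mm / layer_height_mm - 1e-9)
    return [
        round(min((i + 1) * layer_height_mm, model_height_mm), 4)
        for i in range(count)
    ]


if __name__ == "__main__":
    # A 10 mm tall object at an assumed 0.2 mm layer height.
    print(len(layer_heights(10.0, 0.2)))  # 50 layers
```

Thinner layers give smoother surfaces but more layers to print, which is one reason print times vary so widely for the same model.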

The main elements of the workshop are: 1) acquisition of digital 3D models, from online repositories to creating your own with photogrammetry, 3D-scanning technologies, or modelling software; 2) software to clean and reshape digital models in order to make them print-ready and to remove artifacts from the scanning process; and 3) 3D printers and the software to control and use them.

This workshop is targeted towards scholars interested in learning about technologies surrounding 3D printing and additive manufacturing, and in finding accessible solutions for implementing desktop fabrication technologies in scholarly work. Past workshops have been for faculty, graduate and undergraduate students in the humanities, librarians, archivists, GLAM professionals, and digital humanities centers. This workshop is introductory in character, so little prior experience is necessary, only a desire to learn and be engaged with the topic. Those attending are asked to bring, if possible, a laptop computer to install and run the software introduced, and a digital camera or smartphone for experimenting with photogrammetry. Workshop facilitators will bring cameras, a 3D printer, plastics, and related materials for the event. By the end of the conference, each participant will have the opportunity to print an object for their own use.

@jenterysayers @clioweb @devonelliott Fantastic #DH2013 workshop: laptops full of software, pockets full of plastic, & heads full of ideas.

— Constance Crompton (@CLKCrompton) July 16, 2013

I don’t care how awesome the other workshops may be, we’re having the most fun with @jenterysayers, @clioweb, and @devonelliott. #DH2013

— Brian Croxall (@briancroxall) July 16, 2013

“Versioning Texts and Concepts”

Daniel Carter (Primary Author), University of Texas at Austin

Stephen Ross, University of Victoria

Jentery Sayers, University of Victoria

Susan Schreibman, Trinity College Dublin

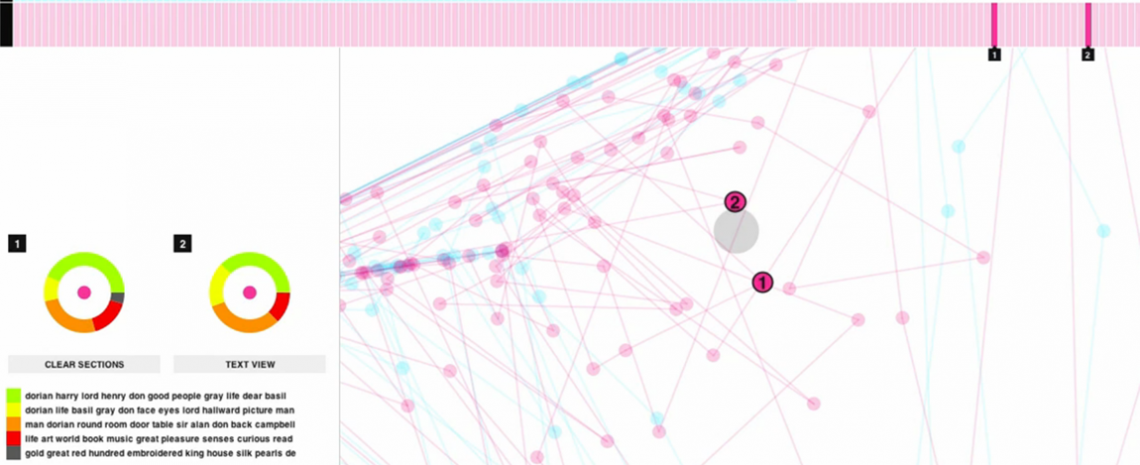

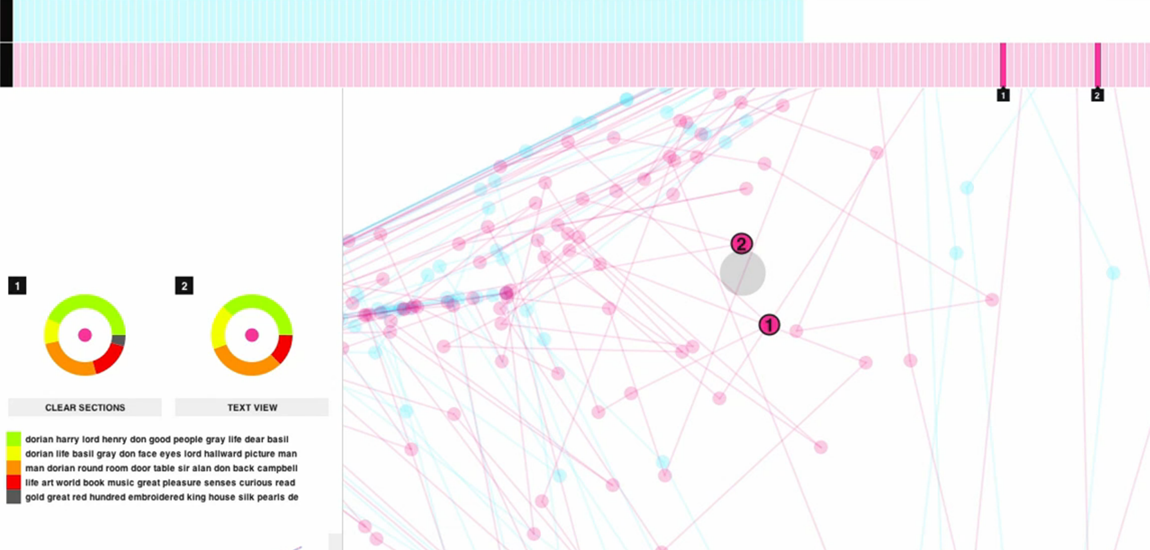

This paper presents the results of the Modernist Versions Project’s (MVP) survey of existing tools for digital collation, comparison, and versioning. The MVP’s primary mission is to enable interpretations of modernist texts that are difficult without computational approaches. We understand versioning as the process through which scholars examine multiple witnesses of a text in order to gain critical insight into its creation and transmission and then make those witnesses available for critical engagement with the work. Collation is the act of identifying different versions of a text and noting changes between texts. Versioning is the editorial practice of presenting a critical apparatus for those changes. To this end, the MVP requires tools that: (1) identify variants in TXT and XML files, (2) export those results in a format or formats conducive to visualization, (3) visualize them in ways that allow readers to identify critically meaningful variations, and (4) aid in the visual presentation of versions.
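Requirements (1) and (2) can be illustrated with a small sketch. It is not one of the tools the MVP surveyed: it uses Python's standard `difflib` module to compare two plain-text witnesses word by word and exports the variants as JSON, a format most visualization libraries can read. The function name, the JSON field names, and the two sample sentences are invented for the example.

```python
import json
from difflib import SequenceMatcher


def collate(witness_a: str, witness_b: str) -> list:
    """Return word-level variants between two witnesses as a list of dicts."""
    a, b = witness_a.split(), witness_b.split()
    matcher = SequenceMatcher(a=a, b=b, autojunk=False)
    variants = []
    for tag, a0, a1, b0, b1 in matcher.get_opcodes():
        if tag == "equal":
            continue  # shared text is not a variant
        variants.append({
            "type": tag,            # "replace", "insert", or "delete"
            "position": a0,         # word offset in witness A
            "a": " ".join(a[a0:a1]),
            "b": " ".join(b[b0:b1]),
        })
    return variants


if __name__ == "__main__":
    # Placeholder sentences, not drawn from any edition.
    first = "the grey ship left port at dawn"
    second = "the gray ship left the port at dawn"
    print(json.dumps(collate(first, second), indent=2))
```

Splitting on whitespace is the simplest possible tokenization. A real collation workflow would also need decisions about punctuation, case, and markup, which is part of what distinguishes the surveyed tools from one another.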

The MVP surveyed and assessed an array of tools created specifically for aiding scholars in collating texts, versioning them, and visualizing changes between them. These tools include: (1) JuxtaCommons, (2) DV Coll, (3) TEI Comparator, (4) Text::TEI::Collate, (5) Collate2, (6) TUSTEP, (7) TXSTEP, (8) CollateX, (9) SimpleTCT, (10) Versioning Machine, and (11) HRIT Tools (nMerge). We also examined version control systems such as Git and Subversion in order to better understand how they might inform our understanding of collation in textual scholarship. This paper presents the methodologies of the survey and assessment as well as the MVP’s initial findings.

Part of the MVP’s mandate is to find new ways of harnessing computers to find differences between witnesses and to then identify the differences that make a difference (Bateson). In modernist studies, the most famous example of computer-assisted collation is Hans Walter Gabler’s use of the Tübingen System of Text Processing tools (TUSTEP) to collate and print James Joyce’s Ulysses in the 1970s and 1980s. Yet some constraints, such as those identified by Wilhelm Ott in 2000, still remain in the field of textual scholarship, especially where collation and versioning applications are concerned. Ott writes, “scholars whose profession is not computing but a more or less specialized field in the humanities have to be provided with tools that allow them to solve any problems that occur without having to write programs themselves. This leads to a concept of relatively independent programs for the elementary functions of text handling” (97). Indeed, the number of programs available for collation work has grown since 2000, including additions to TUSTEP (TXSTEP) as well as the newest web-based collation program, JuxtaCommons.

Accordingly, the MVP has reviewed tools currently available for collation work in order to provide an overview of the field and to identify software that might be further developed in order to create a collating, versioning, and visualization environment. Most of these tools were developed for specific projects, and thus do what they were designed to do quite well. Our question is whether we can modify existing tools to fit the needs of our project or whether a suite of collation and visualization tools needs to be developed from scratch. This survey is thus an attempt to chart the tools that may be useful for the kinds of collation and versioning workflows our team is developing specifically for modernist studies, so we can then test methods based on previous tools and envision future developments to meet emerging needs. Our initial research with Versioning Machine and JuxtaCommons suggests that there is potential for bringing tools together to create a more robust versioning system. Tools such as the Versioning Machine work well if one is working with TEI P5 documents; however, we are equally interested in developing workflows that do not rely upon the TEI, or do not require substantive markup. Finally, we are examining whether version control systems such as Git present viable alternatives to versioning methods now prevalent in textual studies.

Our method adapts the rubric Hans Walter Gabler devised for surveying collation and versioning tools in his 2008 white paper, “Remarks on Collation.” We first assessed the code and algorithms underlying each tool on our list, and we then tested each tool using a literary text. In this particular case, we used two text files and two TEI XML files from chapter three of Joseph Conrad’s Nostromo, which we have in OCR-corrected and TEI-Lite marked-up states from the 1904 serial edition and the 1904 first book edition. During each test, we used a tool assessment rubric (available upon request) to maintain consistent results across each instance. All tests were accompanied by research logs for additional commentary and observations made by our research team.

Our preliminary findings suggest that: 1) Many existing collation tools are anchored in obsolete technologies (e.g., TUSTEP was originally written in Fortran and, despite major upgrades, still relies on its own scripting language and front end to operate; DV-Coll was written for DOS, though it has been updated for use with Windows 7). 2) Many tools present accessibility obstacles because they are desktop-only entities, making large-scale collaborative work on shared materials difficult and prone to duplication and/or loss of work. Of the tools that offer web-based options, JuxtaCommons is the most robust. 3) The “commons” approach to scholarly collaboration is among the most promising directions for future development. We suggest the metaphor of the commons is useful for tool development in versioning and collation as well as for building scholarly community (e.g., MLA Commons). We note the particular usefulness in this regard of the JuxtaCommons collation tool and the Modernist Commons environment for preparing digital texts for processing. The latter, under development by Editing Modernism in Canada, is currently working to integrate collation and versioning functions into its environment. 4) Version control alternatives to traditional textual studies-based versioning and visualization present an exciting set of possibilities. Although the use of Git, GitHub, and Gist for collating, versioning, and visualizing literary texts has not gained much traction, we see great potential in this line of inquiry. 5) Developers and projects should have APIs in mind when designing tools for agility and robustness across time. Web-based frameworks allow for this type of collaborative development, and we are pleased to see that Juxta has released a web service API for its users. 6) During tool development, greater attention must be given to extensibility, interoperability, and flexibility of functionality. Because many projects are purpose-built, they are often difficult to adapt to non-native corpora and divergent workflows.
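As a rough illustration of the fourth finding, the sketch below produces the kind of line-based unified diff that version control systems such as Git display when two versions of a file differ. It uses Python's standard `difflib` rather than Git itself, and the function name, file names, and sample lines are hypothetical.

```python
from difflib import unified_diff


def witness_diff(a_text: str, b_text: str,
                 a_name: str = "witness_a.txt",
                 b_name: str = "witness_b.txt") -> list:
    """Return a unified diff of two witnesses, one output line per list item."""
    return list(unified_diff(
        a_text.splitlines(),
        b_text.splitlines(),
        fromfile=a_name,
        tofile=b_name,
        lineterm="",
    ))


if __name__ == "__main__":
    # Placeholder text, one sentence per line.
    earlier = "The ship left port.\nIt was dawn.\nNo one spoke."
    later = "The ship left port.\nIt was nearly dawn.\nNo one spoke."
    print("\n".join(witness_diff(earlier, later)))
```

Because this kind of diff works line by line, a single changed word marks the whole line as changed, and in prose a “line” is often an entire paragraph. That granularity is one reason word-level options (for example, Git's `--word-diff` flag) or dedicated collation tools may suit literary texts better.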

Post by Jentery Sayers, attached to the Makerspace, ModVers, and KitsForCulture projects, with the news, fabrication, and versioning tags. Featured images for this post care of Jentery Sayers, Jeremy Boggs, Devon Elliott, Brian Croxall, Daniel Carter, and the Digital Humanities 2013 website.
